Lightbits provides a software-defined storage solution that uses commodity servers to deliver flexible, scalable, and high-performance storage. To optimize the deployment of a Lightbits cluster, it is essential to follow the best practices detailed here. The following sections discuss these practices in depth, focusing on optimal hardware configurations, network setups, and performance enhancements.

Hardware Requirements

Servers

CPU

The performance of a Lightbits cluster is closely tied to the CPU capabilities of the host servers. For optimal processing power, it is advisable to deploy servers with dual-socket CPUs that feature a high core count; a minimum of 32 physical cores with Hyper-Threading (HT) enabled is recommended. This requirement stems from how Lightbits software dedicates cores to specific tasks within its stack, including:

- Readers

- Writers

- etcd

- Metadata management

- Recovery processes

- Replication tasks

Additionally, the type and speed of the CPU significantly impact performance. For instance, modern Intel CPUs such as Emerald Rapids and Sapphire Rapids offer superior performance compared to older generations such as Ice Lake or Cascade Lake.
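As a rough way to check a server against these CPU guidelines, the Python sketch below parses the text of Linux `/proc/cpuinfo` and counts sockets, physical cores, and logical CPUs. It is not a Lightbits tool, and the function names are hypothetical. It assumes the 32-core minimum is a per-server total across both sockets, so adjust `MIN_PHYSICAL_CORES` if your reading differs. On CPUs that do not report `physical id` or `core id`, it treats each logical CPU as its own core on socket 0.

```python
MIN_PHYSICAL_CORES = 32  # assumption: per-server total across both sockets
MIN_SOCKETS = 2


def summarize_cpuinfo(text: str) -> dict:
    """Count sockets, physical cores and logical CPUs in /proc/cpuinfo text."""
    logical = 0
    sockets = set()
    cores = set()  # unique (physical id, core id) pairs
    for block in text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, sep, value = line.partition(":")
            if sep:
                fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue  # skip trailer blocks that describe no CPU
        logical += 1
        socket = fields.get("physical id", "0")
        sockets.add(socket)
        # Without "core id", count each logical CPU as its own core.
        cores.add((socket, fields.get("core id", fields["processor"])))
    return {
        "sockets": len(sockets),
        "physical_cores": len(cores),
        "logical_cpus": logical,
        "hyperthreading": logical > len(cores),
    }


def meets_cpu_guideline(summary: dict) -> bool:
    """Dual socket, at least MIN_PHYSICAL_CORES physical cores, and HT enabled."""
    return (summary["sockets"] >= MIN_SOCKETS
            and summary["physical_cores"] >= MIN_PHYSICAL_CORES
            and summary["hyperthreading"])
```

On a Linux host, pass the contents of `/proc/cpuinfo` to `summarize_cpuinfo` and hand the result to `meets_cpu_guideline`.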

Memory

RAM requirements are directly influenced by the number of SSD drives and their capacities. More drives or larger capacities require more memory to handle the higher throughput and the associated data management overhead, such as compression and metadata. Lightbits has a memory calculator that can help customers determine the correct amount of memory for any deployment type.

Data SSD Drives

While the type of SSD drive can influence performance, opting for SLC or MLC drives - which are often more expensive - is not mandatory. TLC or QLC drives, especially those with larger capacities, can also achieve high performance. Lightbits' Intelligent Flash Management layer efficiently optimizes I/O for these high-capacity drives, ensuring effective performance without the added cost.

Journaling SSD Drives

Because the journaling SSD devices are in the write path, they directly affect write latency and bandwidth. It is therefore recommended to use high-performance, low-latency drives with high endurance; SLC or MLC drives, although often more expensive, are recommended for this role. While we support one to four journaling devices per node, in most cases installing two drives provides good performance.

QLC Drives

Although Lightbits recommends TLC drives, we support QLC drives as well. However, please note that certain QLC models could experience performance degradation over time. Therefore, we recommend checking the vendor and model of QLC drives in advance with Lightbits Support / Sales Engineers.

Network Configuration

Ethernet Interfaces

For optimal performance, servers should be equipped with multiple Ethernet network interfaces. The speed of these interfaces plays a critical role in performance, and 100Gb Ethernet is recommended to handle the high data throughput requirements of modern applications. See the Networking Best Practices section below for additional information, including the advanced configuration for NIC bonding.

Cluster Configuration

Node Count

Number of Nodes

For production environments, deploying a minimum of four nodes is crucial to ensuring high availability and robust fault tolerance. This setup not only facilitates load distribution across multiple nodes, but also introduces redundancy - which significantly reduces the risk of downtime and data loss.

In terms of volume management, a replication factor of 3 (RF3) is highly recommended. With RF3, each volume is replicated across three nodes, ensuring that even if one node fails, the volume remains fully operational and does not enter a degraded state. In a four-node cluster, this means that after a single node fails, the three remaining nodes can still host all three replicas, maintaining the RF3 configuration and the integrity and availability of your data.

While RF3 is highly recommended, it is not a requirement. RF2 or even RF1 can be used on the Lightbits cluster as long as the front-end applications or databases provide their own high availability (HA). Applications such as Cassandra or MongoDB are designed with built-in HA mechanisms that eliminate single points of failure within the database. This allows them to use volumes with a replication factor of 1 (RF1) effectively, ensuring continued operation without downtime even if a component fails.
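The node-count reasoning above reduces to simple arithmetic. This Python sketch assumes, as the text describes, that each replica of a volume is placed on a distinct node. It models only replica counts and placement capacity, not Lightbits' actual rebuild or quorum behavior, and the function names are hypothetical.

```python
def surviving_replicas(rf: int, failed_replica_nodes: int) -> int:
    """Copies of a volume left after some of the nodes holding it fail."""
    return max(rf - failed_replica_nodes, 0)


def can_restore_full_rf(cluster_nodes: int, rf: int, failed_nodes: int) -> bool:
    """True if enough healthy nodes remain to hold rf replicas on distinct nodes."""
    return cluster_nodes - failed_nodes >= rf


for nodes in (3, 4):
    ok = can_restore_full_rf(nodes, rf=3, failed_nodes=1)
    print(f"{nodes} nodes, RF3, one node down: room for all 3 replicas = {ok}")
# A 3-node cluster cannot re-host the third replica; a 4-node cluster can.
```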

Performance Tuning

NUMA Nodes

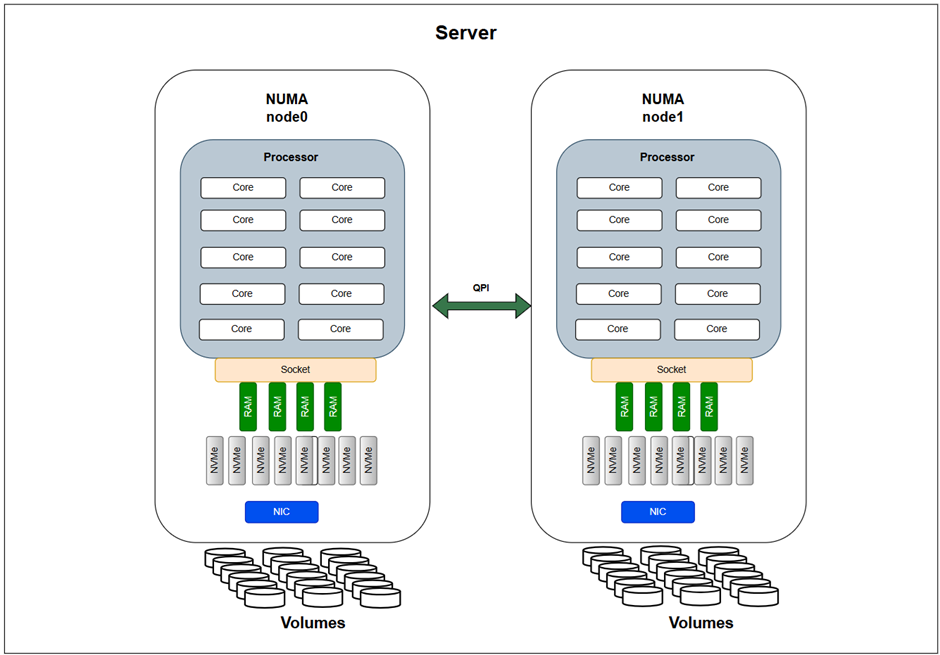

For optimal performance, configuring servers to utilize dual NUMA nodes is recommended. This configuration enhances memory access efficiency in multi-processor systems, resulting in more effective processing and quicker data handling. Each NUMA node is equipped with its own dedicated set of NVMe drives, RAM, and an Ethernet Network Interface Card (NIC), which isolates the storage and network operations to specific nodes. By aligning Lightbits volumes to specific NUMA nodes, this setup avoids cross-NUMA traffic, which can significantly degrade performance.

The following diagram illustrates this configuration.

NUMA Nodes Architecture
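To confirm that each NUMA node actually has its own NIC and NVMe drives, the NUMA affinity of PCI devices can be read from Linux sysfs (`/sys/class/net/<iface>/device/numa_node` and `/sys/class/nvme/<controller>/device/numa_node`). The Python sketch below groups devices by NUMA node. The function names are hypothetical, `sysfs_root` is a parameter only so the code can be tested against a fake tree, and a value of -1 means the kernel reports no NUMA information for that device.

```python
from pathlib import Path


def numa_node_of(device_dir: Path) -> int:
    """NUMA node of a PCI device, or -1 if the kernel reports none."""
    try:
        return int((device_dir / "numa_node").read_text().strip())
    except (OSError, ValueError):
        return -1


def numa_layout(sysfs_root: str = "/sys") -> dict:
    """Group NICs and NVMe controllers by NUMA node: {node: {"nics": [...], "nvme": [...]}}."""
    root = Path(sysfs_root)
    layout = {}
    for kind, class_dir in (("nics", "class/net"), ("nvme", "class/nvme")):
        base = root / class_dir
        if not base.is_dir():
            continue
        for dev in sorted(base.iterdir()):
            device_dir = dev / "device"
            if not device_dir.exists():  # virtual interfaces (lo, bonds) have no PCI parent
                continue
            node = numa_node_of(device_dir)
            layout.setdefault(node, {"nics": [], "nvme": []})[kind].append(dev.name)
    return layout


def each_node_self_contained(layout: dict) -> bool:
    """True if every reported NUMA node has at least one NIC and one NVMe controller."""
    nodes = {n: v for n, v in layout.items() if n >= 0}
    return bool(nodes) and all(v["nics"] and v["nvme"] for v in nodes.values())
```

Calling `numa_layout()` with no arguments reads the live `/sys` tree; the management NIC will also appear in the result and can be ignored when judging data-path alignment.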

Number of Volumes

To maximize the performance of a Lightbits cluster, it is beneficial to create multiple volumes on each NUMA node across all servers within the cluster. This approach leverages Lightbits' unique architecture, which is designed to optimize performance through the distribution of workloads (see the diagram above). Although configuring multiple volumes will yield higher performance, utilizing a single large volume is also a viable option.

Unlike traditional storage solutions from other vendors, where performance can degrade as more volumes are added, the Lightbits system maintains or even improves performance, with consistently low latency, as more volumes are created. This advantage comes from Lightbits' efficient management of I/O operations and data placement, which ensures that additional volumes contribute to balanced load distribution and faster data access.

Creating a higher number of volumes across the NUMA nodes enables better parallel processing and improves redundancy, further solidifying the performance and reliability of the storage cluster.
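The guidance to spread multiple volumes over every NUMA node of every server amounts to a round-robin layout. The Python sketch below only models that intended spread: it does not call any Lightbits API, it ignores where each volume's replicas land, and the server names and `vol-NNN` naming scheme are hypothetical. Servers are interleaved first, so consecutive volumes land on different servers.

```python
from itertools import cycle, islice


def plan_volumes(servers: list[str], numa_nodes_per_server: int,
                 volume_count: int) -> dict[tuple[str, int], list[str]]:
    """Assign hypothetical volume names round-robin to every (server, NUMA node)."""
    targets = [(s, n) for n in range(numa_nodes_per_server) for s in servers]
    plan = {t: [] for t in targets}
    for i, target in enumerate(islice(cycle(targets), volume_count)):
        plan[target].append(f"vol-{i:03d}")
    return plan


plan = plan_volumes(["server-a", "server-b", "server-c", "server-d"], 2, 16)
for (server, numa), vols in plan.items():
    print(server, f"numa{numa}", vols)
```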

Networking Best Practices

Interface Configuration

Management Interface

For streamlined management of the Lightbits cluster, it is advisable to dedicate one network interface solely to management access. This ensures that administrative traffic does not interfere with data-path traffic.

Data Path Interfaces

To maximize data throughput and performance, deploying at least two 100Gb Ethernet interfaces for the data path is recommended, particularly in a dual NUMA node configuration where each NUMA node should have its own dedicated interface. For optimal performance, it is advisable to use dual-port Ethernet interfaces, as they offer both higher throughput and redundancy. For clusters with a single-instance configuration, a single dual-port 100Gb Ethernet interface could suffice - although dual interfaces are preferable for enhanced performance.
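A quick sanity check for the data-path recommendation is to read each interface's negotiated speed from Linux sysfs (`/sys/class/net/<iface>/speed`, reported in Mb/s). This Python sketch is an illustration, not a Lightbits tool. Interface names such as `ens1f0` are hypothetical, and reading `speed` can fail or return -1 when a link is down, which the sketch treats as not meeting the requirement.

```python
from pathlib import Path

REQUIRED_MBPS = 100_000  # 100GbE; sysfs reports speed in Mb/s


def link_speed_mbps(iface: str, sysfs_root: str = "/sys") -> int:
    """Negotiated speed of iface in Mb/s, or -1 if unreadable (e.g. link down)."""
    try:
        return int(Path(sysfs_root, "class", "net", iface, "speed").read_text().strip())
    except (OSError, ValueError):
        return -1


def data_path_ok(ifaces: list[str], sysfs_root: str = "/sys", min_ifaces: int = 2) -> bool:
    """At least min_ifaces data-path interfaces, all linked at >= 100 Gb/s."""
    return (len(ifaces) >= min_ifaces
            and all(link_speed_mbps(i, sysfs_root) >= REQUIRED_MBPS for i in ifaces))
```

For a dual NUMA node server, list one data-path interface per NUMA node, for example `data_path_ok(["ens1f0", "ens2f0"])`.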

Performance Considerations

The choice between single and dual Ethernet interfaces should be guided by the desired performance levels and potential network bottlenecks. Ethernet interfaces are often the first components to experience saturation. Adequately planning the network setup can prevent these bottlenecks and ensure consistent, high-speed data transfer.
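The saturation point can be estimated by comparing aggregate NIC line rate with aggregate drive bandwidth. In the Python sketch below, the per-drive throughput is a hypothetical input, not a Lightbits or vendor figure. Protocol overhead and replication traffic are ignored, so real network headroom is lower than this estimate.

```python
def gbit_to_gbyte(gbps: float) -> float:
    """Line rate in Gb/s to GB/s (8 bits per byte; protocol overhead ignored)."""
    return gbps / 8


def network_is_bottleneck(nic_ports: int, port_gbps: float,
                          drives: int, drive_gbyte_s: float) -> bool:
    """True if aggregate drive bandwidth exceeds aggregate NIC line rate."""
    network = nic_ports * gbit_to_gbyte(port_gbps)
    storage = drives * drive_gbyte_s
    return storage > network


# Hypothetical server: two 100GbE ports (25 GB/s total) and twelve drives
# assumed to deliver 3 GB/s each (36 GB/s total).
print(network_is_bottleneck(nic_ports=2, port_gbps=100, drives=12, drive_gbyte_s=3.0))  # True
```

In this hypothetical case the network saturates well before the drives do, which is why the number and speed of data-path interfaces deserve early planning.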

Advanced Configurations

Bonding

In scenarios where multiple NICs are used, a bonding configuration can provide additional benefits: bonding multiple network interfaces increases the available bandwidth and provides failover redundancy, improving fault tolerance and network reliability. By carefully configuring network interfaces and considering advanced options such as bonding, you can significantly improve the performance and resilience of your Lightbits storage cluster.
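Once a bond is configured, the Linux bonding driver exposes its state in `/proc/net/bonding/<bond>`. The Python sketch below parses that text to confirm that the bond is up and that at least two member links report `MII Status: up`. The function names are hypothetical, and only a few fields (`Bonding Mode`, `MII Status`, `Slave Interface`, `Speed`) are read.

```python
def parse_bond_status(text: str) -> dict:
    """Parse /proc/net/bonding/<bond> text into mode, bond MII status and member links."""
    info = {"mode": None, "mii_status": None, "slaves": {}}
    current = None  # member link being parsed; None while still in the bond header
    for raw in text.splitlines():
        key, sep, value = raw.partition(":")
        if not sep:
            continue  # blank lines and lines without a "key: value" pair
        key, value = key.strip(), value.strip()
        if key == "Bonding Mode":
            info["mode"] = value
        elif key == "Slave Interface":
            current = value
            info["slaves"][current] = {"mii_status": None, "speed": None}
        elif key == "MII Status":
            if current is None:
                info["mii_status"] = value
            else:
                info["slaves"][current]["mii_status"] = value
        elif key == "Speed" and current is not None:
            info["slaves"][current]["speed"] = value
    return info


def bond_fully_redundant(info: dict) -> bool:
    """True if the bond is up and at least two member links report MII Status: up."""
    return (info["mii_status"] == "up" and len(info["slaves"]) >= 2
            and all(s["mii_status"] == "up" for s in info["slaves"].values()))
```

On a host, read `/proc/net/bonding/bond0` (substituting your bond name) and pass its text to `parse_bond_status`.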

Conclusion

Deploying a Lightbits cluster with the right hardware and configurations can significantly enhance storage performance and reliability. By following these best practices, organizations can build robust storage solutions that more effectively support their operational and business needs.