Volume Placement

With Volume Placement, you can specify which failure domain a volume is placed on when it is created. Volume Placement lets you statically define, separate, and manage how volumes and data tolerate a failure (of a server, rack, row, power grid, and so on) while remaining available. By default, Lightbits defines server-level failure domains. To define custom failure domains, see Failure Domains.

Note that this feature is associated with the create volume command.

Current feature limitations of Volume Placement include the following:

- Available only for single replica volumes.

- Only failure domain labels are currently matched (e.g., fd:server00).

- Up to 25 affinities can be specified.

- Each value (failure domain name) is limited to 100 characters.

- Volume Placement cannot be specified for a clone (a clone is always placed on the same nodes as the parent volume/snapshot).

- Dynamic Rebalancing must be disabled.

- The entire Lightbits cluster must be upgraded to at least release version 2.3.8.
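Some of these limits can be checked before a request is sent. The following is a minimal sketch, not part of lbcli: `validate_placement_affinity` is a hypothetical helper, and it assumes that affinities are separated by `|` and written as `fd:<name>`, as in the example on this page. It checks only the replica count, the number of affinities, the label, and the value length.

```python
MAX_AFFINITIES = 25       # "Up to 25 affinities can be specified."
MAX_VALUE_LENGTH = 100    # failure domain name length limit


def validate_placement_affinity(affinity: str, replica_count: int) -> list[str]:
    """Return the failure domain names in `affinity`, or raise ValueError.

    Hypothetical pre-check; the "|" separator and "fd:" label are assumptions
    taken from the documented example, not from an lbcli specification.
    """
    if replica_count != 1:
        raise ValueError("Volume Placement is available only for single replica volumes")

    entries = affinity.split("|")
    if len(entries) > MAX_AFFINITIES:
        raise ValueError(f"at most {MAX_AFFINITIES} affinities can be specified")

    names = []
    for entry in entries:
        label, separator, value = entry.partition(":")
        if separator != ":" or label != "fd":
            raise ValueError(f"only failure domain labels are matched: {entry!r}")
        if not value:
            raise ValueError(f"missing failure domain name: {entry!r}")
        if len(value) > MAX_VALUE_LENGTH:
            raise ValueError(f"failure domain name exceeds {MAX_VALUE_LENGTH} characters")
        names.append(value)
    return names
```

A check like this only catches malformed input early; the cluster remains the authority on whether a request is accepted.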

In Lightbits 2.3.8 and above, the create volume command accepts a new flag, --placement-affinity, which can be used as follows:

lbcli create volume

lbcli -J $JWT create volume --name=vol1 --acl=acl1 --size="4 Gib" --replica-count=1 --placement-affinity="fd:Server0|fd:rack1|fd:rack0"

In the example above, you ask the system to place the volume as follows:

- On a node that includes Server0 in its failure domains. Note that the server name is used as the default failure domain configuration in node-manager.yaml. You are responsible for deciding whether to configure anything other than the default YAML that Lightbits provides.

- On a node that includes rack1 in its failure domains.

- On a node that includes rack0 in its failure domains.

If Lightbits cannot find an active node whose failure domains match the volume placement request, the create volume request fails. Lightbits does not fall back to placing the volume on other nodes.
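The fail-if-no-match behavior can be pictured with a small sketch. This is an illustration only, not Lightbits' implementation: `find_matching_nodes` and the node names are hypothetical, and the sketch assumes that a node qualifies if it carries any one of the requested failure domain names and that names are compared as exact strings.

```python
def find_matching_nodes(
    node_domains: dict[str, set[str]],
    active_nodes: set[str],
    requested: list[str],
) -> list[str]:
    """Return the active nodes whose failure domains include a requested name.

    Assumption: any single requested name is enough for a node to qualify.
    Raises LookupError instead of falling back to non-matching nodes.
    """
    wanted = set(requested)
    matches = sorted(
        name
        for name, domains in node_domains.items()
        if name in active_nodes and domains & wanted
    )
    if not matches:
        raise LookupError("no active node matches the placement request")
    return matches


# Hypothetical three-node cluster; node-b is not active.
cluster = {
    "node-a": {"Server0", "rack0"},
    "node-b": {"Server1", "rack1"},
    "node-c": {"Server2", "rack2"},
}
print(find_matching_nodes(cluster, {"node-a", "node-c"}, ["rack1", "rack0"]))  # ['node-a']
```

Requesting only `rack1` in this cluster raises `LookupError`, because the only node in that failure domain is inactive and no other node is substituted.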

Volume Assignments

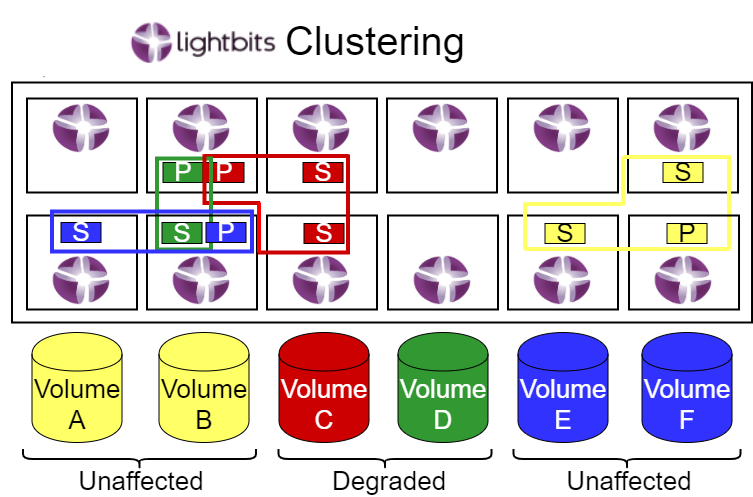

Lightbits provides the following levels of protection for the volumes in a cluster:

- Replication Factor 1 / RF1: Volumes are stored on a single storage node.

- Replication Factor 2 / RF2: Volumes are stored on two storage nodes.

- Replication Factor 3 / RF3: Volumes are stored on three storage nodes.

For Replication Factor 2 and 3, one of the storage nodes behaves as the primary (P) node for the volume, and the volume’s other storage nodes behave as secondary (S) nodes. A Replication Factor 1 volume has only a primary node.

A storage node that stores data of multiple volumes can act as the primary node of one volume and as a secondary node of another. A primary node appears in the accessible path of the client, handles all user IO requests, and replicates data to the secondary nodes. If a primary node fails, the NVMe/TCP multipath feature changes the accessible path and reassigns the primary replica to another node.

When a user creates a volume, Lightbits transparently selects the nodes that hold the volume’s data and configures the primary and secondary roles. The node selection logic balances the volumes between nodes upon volume creation.
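As a rough illustration of balanced assignment, the sketch below picks the nodes that currently hold the fewest volumes and makes the first one the primary. The least-loaded rule, the `assign_volume` name, and the node names are assumptions made for this example; the actual Lightbits selection logic is not described here and may weigh other factors.

```python
def assign_volume(
    volume_counts: dict[str, int],
    replication_factor: int,
) -> tuple[str, list[str]]:
    """Pick nodes for a new volume and return (primary, secondaries).

    Assumed heuristic: choose the nodes holding the fewest volumes,
    breaking ties by node name. Updates `volume_counts` in place.
    """
    if replication_factor not in (1, 2, 3):
        raise ValueError("replication factor must be 1, 2, or 3")
    if replication_factor > len(volume_counts):
        raise ValueError("not enough storage nodes for this replication factor")

    ranked = sorted(volume_counts, key=lambda node: (volume_counts[node], node))
    chosen = ranked[:replication_factor]
    for node in chosen:
        volume_counts[node] += 1  # the new volume now counts against each chosen node

    # The first chosen node acts as primary (P); the rest are secondaries (S).
    return chosen[0], chosen[1:]


counts = {"node-a": 2, "node-b": 0, "node-c": 1}
print(assign_volume(counts, 2))  # ('node-b', ['node-c'])
print(counts)                    # {'node-a': 2, 'node-b': 1, 'node-c': 2}
```

With Replication Factor 1 the returned list of secondaries is empty, which matches the case where a volume has only a primary node.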

© 2026 Lightbits Labs™